The Artificial Intelligence (AI) research community is just beginning to demonstrate what is possible. DOE is providing testbeds to help researchers explore novel hardware, software, and algorithms so that the future of AI is faster, more efficient, safer, and more secure.

Artificial Intelligence (AI) technology promises to benefit society in countless ways. The U.S. AI industry is only beginning to explore the potential of AI systems, and future systems will need to be higher performing and more efficient while offering increased safety and enhanced security. Consistent with the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, the government is focusing its efforts on unlocking these benefits while understanding, evaluating, and mitigating the risks of AI models and systems. DOE is using its network of national laboratories to partner with U.S. industry and academia to explore sophisticated technologies that will continue the rapid development of AI.

One approach that the department has taken over the past decades is to develop and deploy “testbeds”: next-generation computing systems of various sizes, specifically designed for research and for rigorous, transparent, and replicable testing of hardware and software capabilities. DOE’s family of testbeds is already being used to explore a wide variety of research questions, including how AI hardware may evolve to become more efficient and how the risks associated with the use of AI can be effectively managed.

AI Testbeds Key Facts

There are dedicated AI testbeds at seven national laboratories. These testbeds:

- Support AI hardware development and testing, reliability testing, and application development for DOE’s larger-scale production computing facilities.

- Range in size from single processors up to systems with hundreds of nodes.

- Explore different computing architectures including CPU, GPU, and heterogeneous system types; accelerators; different interconnect types; different memory architectures; and different security levels.

- Complement a larger ecosystem of research infrastructure that includes computational testbeds and energy systems testbeds.

Teams at DOE are also using testbeds at the national laboratories to perform red teaming of AI models. The testbeds provide a neutral platform that can host sensitive data, and their large-scale, GPU-enabled systems can process substantial AI computations. Results from these tests help AI developers, academics, and policymakers better understand the capabilities and performance of AI models, leading over successive iterations to safer, more secure systems and a stronger AI ecosystem overall. DOE is making its testbeds and computing resources available to several government agencies and is collaborating on red-team testing and evaluation of the risks that AI systems pose to public safety, the economy, and society, including risks related to weapons of mass destruction and cybersecurity.

DOE’s testbeds also support research on Privacy Enhancing Technologies (PETs), a family of tools that enable large-scale data processing while protecting the confidentiality, sensitivity, and privacy of the data. PETs are a key component of future trustworthy AI deployments. DOE’s large-scale computational capabilities, combined with the ability to store and process sensitive data, allow development and testing of PETs on both real and synthetic data. DOE’s Office of Science partnered with the National Science Foundation to launch a Research Coordination Network dedicated to enhancing privacy research for AI, and DOE will collaborate with the U.S. AI Safety Institute to develop and evaluate PETs and to develop guidelines for their deployment.

FAQs about DOE's AI Testbeds

DOE partners with many hardware vendors and system designers to design and deploy novel testbeds that explore a wide range of innovations. No single computer architecture is fully optimized for all of DOE’s workloads because our national laboratories use many different types of algorithms and applications to meet our science, energy, and security missions. By deploying multiple types of testbeds, researchers can identify which characteristics of each system provide the greatest performance and energy efficiency.

Some of DOE’s testbeds are available for open research through the host DOE national laboratories; other testbeds are made available through collaborative research with other Federal agencies, academia, and U.S. industry. Many testbeds are specifically established to provide access to the open research community. DOE and its vendor partners gain deep value from the exchange of ideas that occurs through testbed research projects, which accelerates innovation. For more information on how to collaborate with DOE, see the links below.

Researchers at DOE routinely publish ground-breaking and world-leading research papers, laboratory reports, and presentations. Whenever possible, DOE makes its research available to the public. More than three million research artifacts published by DOE can be found at the Office of Scientific and Technical Information.

DOE deploys testbeds and computing systems for every category of information used across our mission space, including open science, controlled unclassified information, and classified data. This allows us to protect national security while still engaging in the important work of identifying the fastest and most secure AI hardware for our work.

Learn more about DOE's AI Testbeds

Testbeds at the national labs

Architectures available at DOE testbeds

DOE has partnered with Cerebras since the introduction of its first Wafer-Scale Engine AI systems. Systems designed by Cerebras utilize the world’s largest processors to accelerate AI training. There are multiple Cerebras systems installed at DOE’s national laboratories.

Groq produces processors which are optimized to deliver fast AI inference – the process of running prompts against an AI model. DOE is investigating the use of Groq chips in production.

SambaNova produces systems which perform AI training and fast inference. DOE has been a partner with SambaNova for multiple iterations of its products and has a number of systems deployed at the national laboratories.

DOE was one of the first agencies in the world to use NVIDIA GPUs to perform high-performance scientific calculations. Thousands of NVIDIA GPUs are installed across DOE’s supercomputers and AI testbeds. The latest system, Venado, deployed at Los Alamos National Laboratory in New Mexico, contains NVIDIA’s latest Grace/Hopper superchips, allowing DOE researchers to evaluate performance of hybrid HPC/AI algorithms.

AMD GPUs and APUs (Accelerated Processing Units) are used by DOE in its Exascale supercomputers. Frontier, installed at Oak Ridge National Laboratory, and El Capitan, installed at Lawrence Livermore National Laboratory, are currently among the fastest computers in the world. DOE and AMD have partnered for multiple decades, helping to codesign novel features for processors, GPUs and now APUs.

Intel Data Center GPUs are deployed in the Aurora Exascale supercomputer installed at Argonne National Laboratory. Aurora is among the largest computers in the world and is being used to develop large-scale AI model training algorithms.

The Intel Loihi neuromorphic processor is an ultra-low power chip which models neuron activation in the brain to perform learning and AI functions. DOE is partnering with Intel to investigate the potential energy efficiency of using neuromorphic chips in the future.

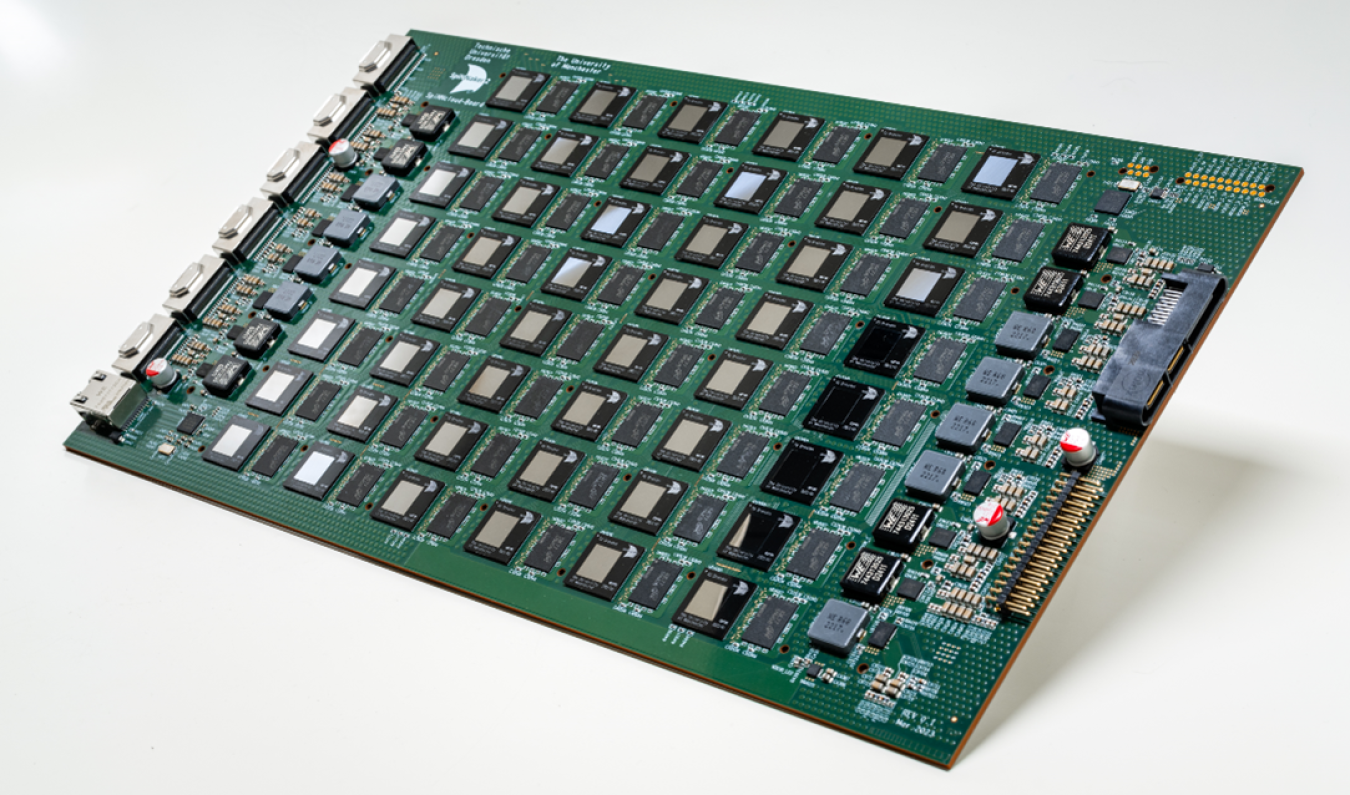

SpiNNaker is a brain-inspired processor that models the behavior of neurons, using low-power cores to recreate that behavior. DOE is partnering with SpiNNCloud, the developer of SpiNNaker chips, to evaluate potential future uses for the technology.

NextSilicon chips can dynamically reconfigure themselves to replicate HPC and AI algorithms. The reconfigurability has the potential to increase processor performance and reduce energy consumption. DOE has partnered with NextSilicon to port and evaluate algorithms on reconfigurable hardware.